Using third-party generative AI services requires transmitting user inputs to those providers for processing. That puts fourth-party artificial intelligence vendors squarely within the scope of your organization’s Vendor Risk Management program. In other words, when third parties share your data with fourth-party service providers, you need to know the data is handled in accordance with your security practices. The current state of AI regulation and adoption makes it all the more urgent to understand when those fourth-party vendors are providing AI software or any other AI-powered service.

Risk appetites for AI systems vary widely between companies. We have heard from organizations that require no exposure to AI services and others that want to ensure they are realizing AI-enabled and machine learning productivity gains. These divergent security risk appetites mirror the high variance in how regulations apply by geography and industry.

The EU has already had an AI Act in force since August 2024. The US has a patchwork of existing and emerging state laws, with no federal regulation in sight. Australia’s policy approach is in progress. Whereas existing data privacy laws have focused on data types, AI regulation looks at decision making, creating greater regulatory focus for new industries.

As if a rapidly evolving risk environment weren’t challenging enough, AI adoption is also proliferating rapidly. As of the end of 2024, ChatGPT claimed over 300 million weekly active users and 1.3 million developer accounts.

In this report, we show that at least 30% of companies are using AI services to process user data. Thus, the urgency: regulatory requirements for AI technology are coming to a head at the same time that the amount of AI usage in the software supply chain is increasing, creating a ticking time bomb for those who ignore it. As AI usage grows unchecked, the supply chain risks it creates will continue compounding.

This report provides specific examples of how AI can be detected in the supply chain, the frequency of different types of use, and how to incorporate it into your Vendor Risk Management program.

Collecting evidence of AI usage

The UpGuard platform collects evidence by scanning public websites for technical information and by gathering security documentation from vendors. That data can also be used to highlight supply chain risks in vendor risk assessments. This report uses data collected by scanning websites for third-party code and dependencies and by crawling websites for public data subprocessor pages.

We used data collected for the 250 most commonly monitored vendors in the UpGuard platform to best represent the impacts these issues can have on the real-world supply chain ecosystem.

AI vendors visible via external scanning

Websites reveal part of their software supply chain by using scripts hosted on third-party vendor domains. Those domains can be associated with vendors, which can, in turn, be classified as AI vendors based on their services.
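The detection idea above can be sketched in a few lines of Python. This is a minimal illustration, not UpGuard's scanner: the `AI_VENDOR_DOMAINS` mapping, the function name `find_ai_vendors`, and the example domains are all hypothetical, and a real scan would also need to handle scripts injected at runtime.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical mapping of script-hosting domains to AI vendors (illustrative only).
AI_VENDOR_DOMAINS = {
    "example-ai.com": "Example AI Chat",
    "example-analytics.io": "Example Predictive Analytics",
}


class ScriptSrcCollector(HTMLParser):
    """Collects the hostnames referenced by <script src="..."> tags."""

    def __init__(self):
        super().__init__()
        self.hosts = set()

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        src = dict(attrs).get("src")
        if src:
            host = urlparse(src).hostname  # None for relative paths
            if host:
                self.hosts.add(host.lower())


def find_ai_vendors(html, site_host):
    """Return {script_host: vendor_name} for third-party hosts that match a known AI vendor domain."""
    collector = ScriptSrcCollector()
    collector.feed(html)
    matches = {}
    for host in collector.hosts:
        if host == site_host.lower():
            continue  # first-party script, not part of the external supply chain
        for domain, vendor in AI_VENDOR_DOMAINS.items():
            # Match the vendor domain itself or any of its subdomains.
            if host == domain or host.endswith("." + domain):
                matches[host] = vendor
    return matches
```

Matching on the domain suffix rather than the exact hostname is a design choice in this sketch, since vendors often serve scripts from several subdomains.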

Across the 250 most used vendors, 36 (14%) had websites configured to run code from a third-party vendor providing AI services.


Embedding scripts on a website has long been a way to deploy predictive marketing analytics tools quickly. Amongst the AI vendors detected this way, there are still many analytics tools, but the hallmark capability of generative AI is right in the name of ChatGPT.

Chat agents are the most common type of AI capability deployed via third-party code. The function of a chat agent speaks to the kind of data likely being passed to the AI fourth party: sales and support inquiries. If a fourth-party AI vendor is compromised, chat agents could also serve as attack vectors, exposing the software supply chain to malware injection or other supply chain attacks.

AI vendors detected using subprocessor disclosures

When your company’s third-party providers share personal datasets with fourth parties, those fourth parties become what GDPR calls a “data subprocessor.” A typical example would be cloud hosting services. You give your payroll vendor your employees’ information, and they store it in a database hosted in AWS, and now AWS is a subprocessor of your data. GDPR-compliant companies must disclose their data subprocessors to their customers.

Many companies voluntarily make their data subprocessor lists public. Out of the 250 companies in this survey, UpGuard researchers identified public subprocessor pages for 147. The other companies almost certainly have data subprocessors but elect to disclose that information only on demand.

There is no standard structure for data subprocessor pages, creating challenges for automated data collection and continuous monitoring. The most typical implementation of a subprocessor page is an HTML table, though the number and labeling of columns in that table vary between companies.

The information can also be in PDFs or other embedded documents that, again, have arbitrary structures. Large companies might have different subprocessors for different products and regions. These factors make confident automated analysis of subprocessor pages possible for most but not all instances. Out of the 147 pages, 119 could be analyzed programmatically.
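To illustrate why column labeling matters, here is a rough sketch of extracting vendor names from the most common case, an HTML table. The header keywords in `NAME_HEADER_HINTS` and the function name `extract_subprocessor_names` are assumptions made for this example; pages that use PDFs, nested tables, or per-product lists would need separate handling.

```python
from html.parser import HTMLParser

# Assumed header keywords; real pages label this column in many different ways.
NAME_HEADER_HINTS = ("subprocessor", "sub-processor", "entity", "vendor", "name", "company")


class TableRows(HTMLParser):
    """Collects table rows as lists of whitespace-normalized cell text."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None
        self._cell = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:
                self.rows.append(self._row)
            self._row = None
            self._cell = None


def extract_subprocessor_names(html):
    """Guess which column holds the vendor name and return its values."""
    parser = TableRows()
    parser.feed(html)
    if not parser.rows:
        return []
    header, *body = parser.rows
    # Pick the first header cell containing a known hint; fall back to column 0.
    col = next(
        (i for i, label in enumerate(header)
         if any(hint in label.lower() for hint in NAME_HEADER_HINTS)),
        0,
    )
    return [row[col] for row in body if len(row) > col and row[col]]
```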

Because LLM solutions like “OpenAI” and “Anthropic” have unique vendor names, subprocessor pages could be confidently searched to determine whether these companies were listed as subprocessors. “Gemini” and “Vertex” were used to identify Google AI services.
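A keyword search of this kind might look like the following sketch. The search terms come from the text above; the grouping into `AI_SUBPROCESSOR_TERMS` and the function name `detect_ai_subprocessors` are illustrative assumptions rather than the exact method used for this report.

```python
import re

# Search terms taken from the report; the grouping is an assumption for this example.
AI_SUBPROCESSOR_TERMS = {
    "OpenAI": ("openai",),
    "Anthropic": ("anthropic",),
    "Google AI": ("gemini", "vertex"),
}


def detect_ai_subprocessors(page_text):
    """Return the set of AI vendors whose search terms appear as whole words."""
    text = page_text.lower()
    found = set()
    for vendor, terms in AI_SUBPROCESSOR_TERMS.items():
        if any(re.search(rf"\b{re.escape(term)}\b", text) for term in terms):
            found.add(vendor)
    return found
```

Whole-word matching keeps the search conservative, which is consistent with verifying the results manually afterwards.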

No false positives or validation errors were discovered when manually verifying the results generated by automated analysis. We omitted results for Microsoft AI services because there were relatively few results in our exploration, and Microsoft AI services were often also identified as OpenAI delivered through Azure.

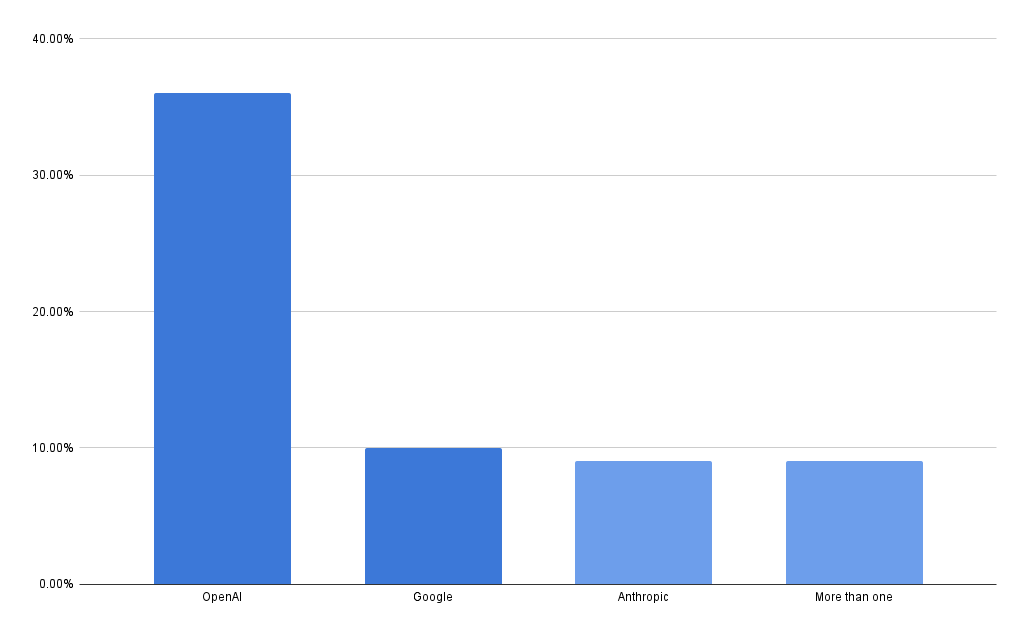

Out of 147 subprocessor pages, 36% of companies listed OpenAI as a data subprocessor, 10% listed Google Gemini or Vertex, and 9% listed Anthropic.

Whereas the chatbots embedded in internet-facing webpages are distributed amongst many small companies, the AI model services used by backend systems for processing production data are highly concentrated in a handful of vendors, most notably in OpenAI.

Over one-third of the companies analyzed (53 out of 147) are processing personal data with OpenAI. That is a very conservative measurement of OpenAI usage; it is likely that more companies are using it in ways that do not require disclosure as a data subprocessor.

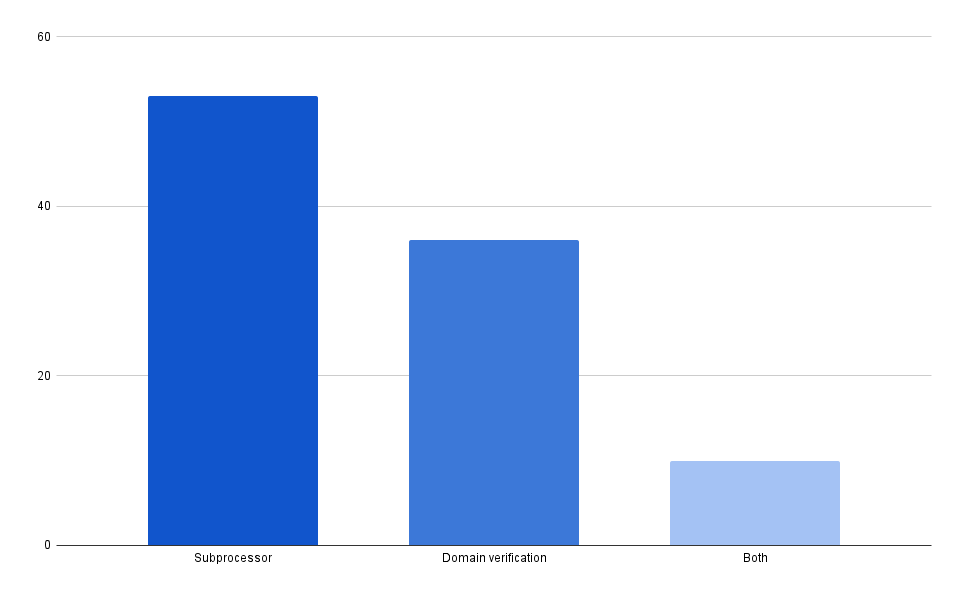

Interestingly, OpenAI also allows users to publish custom GPTs in the ChatGPT store. To prevent brand impersonation cyber threats, companies must add a DNS record to their domain to prove they own it. DNS records are another threat intelligence source that UpGuard scans, making it easy to determine which companies had OpenAI domain verification records.
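As a rough sketch, checking a domain's TXT records for such a verification entry could look like this. The record prefix `openai-domain-verification=` is an assumption about the record format and should be confirmed against OpenAI's documentation; the function name is hypothetical, and the TXT records are expected to have been fetched already by whatever DNS tooling you use.

```python
# Assumed TXT record prefix; confirm against OpenAI's current documentation.
OPENAI_VERIFICATION_PREFIX = "openai-domain-verification="


def has_openai_domain_verification(txt_records):
    """Return True if any TXT record value looks like an OpenAI domain verification entry."""
    return any(
        # DNS tools often wrap TXT values in double quotes, so strip them first.
        record.strip().strip('"').lower().startswith(OPENAI_VERIFICATION_PREFIX)
        for record in txt_records
    )
```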

There is no meaningful correlation between the two kinds of OpenAI usage: 53 companies use OpenAI as a subprocessor, 36 have verified their domain for publishing custom GPTs, and only 10 companies have done both.
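The size of that overlap can be worked out directly from the three counts. The sketch below assumes both counts refer to the same set of companies, which the figures above do not state explicitly; the function name and dictionary keys are illustrative.

```python
def overlap_summary(subprocessor_count, verified_count, both_count):
    """Summarize the overlap between two groups using inclusion-exclusion."""
    either = subprocessor_count + verified_count - both_count
    return {
        "either": either,
        # Jaccard index: share of companies in either group that are in both.
        "jaccard": both_count / either,
        "subprocessor_users_also_verified": both_count / subprocessor_count,
        "verified_also_subprocessor_users": both_count / verified_count,
    }


summary = overlap_summary(53, 36, 10)
```

With these inputs, 79 companies show at least one kind of usage, and the companies doing both make up roughly 13% of that group.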

How to use this in your VRM program

Hearing that vendors are, most likely, passing your company's personal data to OpenAI sounds alarming. And if you’re not currently incorporating that information into your Vendor Risk Management program or cybersecurity risk assessments, you should be. However, the point of data subprocessor disclosures is that by knowing who processes your data, security teams can accurately assess whether those processors introduce vulnerabilities that affect your security posture.

In the case of OpenAI, which UpGuard uses, the terms of service for the Enterprise and Platform plans specify that information submitted to OpenAI is not used to train models. Combined with OpenAI’s other documented security measures for keeping that data confidential, the risk is comparable to that of cloud hosting providers.

By documenting OpenAI as a data subprocessor and collecting evidence from OpenAI that shows it does not use data for training, we can effectively support remediation of the risk it poses as a data subprocessor. You should be able to follow that process for all your vendors; they should disclose their AI supply chain and whether they use input data for training models or AI development.

Vendors may also leverage AI in their development processes, embedding it into the software development lifecycle. Monitoring AI development pipelines helps ensure alignment with your ideal AI security practices.

Ultimately, documented data subprocessors should be a layup for vendor management and procurement. If a fourth party like OpenAI is listed as a subprocessor, everyone involved—you, your vendor, and OpenAI—knows their privacy policies are being assessed. This clarity also strengthens incident responses, enabling rapid action if a fourth-party AI breach occurs, minimizing downstream impacts.

This information can be collected by visiting the websites of each of your vendors and locating the subprocessor page or by using a vendor risk automation platform like UpGuard that centralizes evidence collection. Shadow AI requires multiple detection strategies, but automated detection of fourth parties via website code scanning provides an easy place to get started.

Security solutions like UpGuard ethically leverage AI technology to streamline workflows and expedite security issue management, creating real-time awareness of third-party software supply chain security risks.
